Disclaimer: In fact, plants themselves don’t speak. Rather, a generative AI reads the soil sensor data and generates messages based on that information. These messages are then automatically broadcast through smart speakers in the house whenever selected individuals approach the plant.

Here is a quick demonstration in Bangla

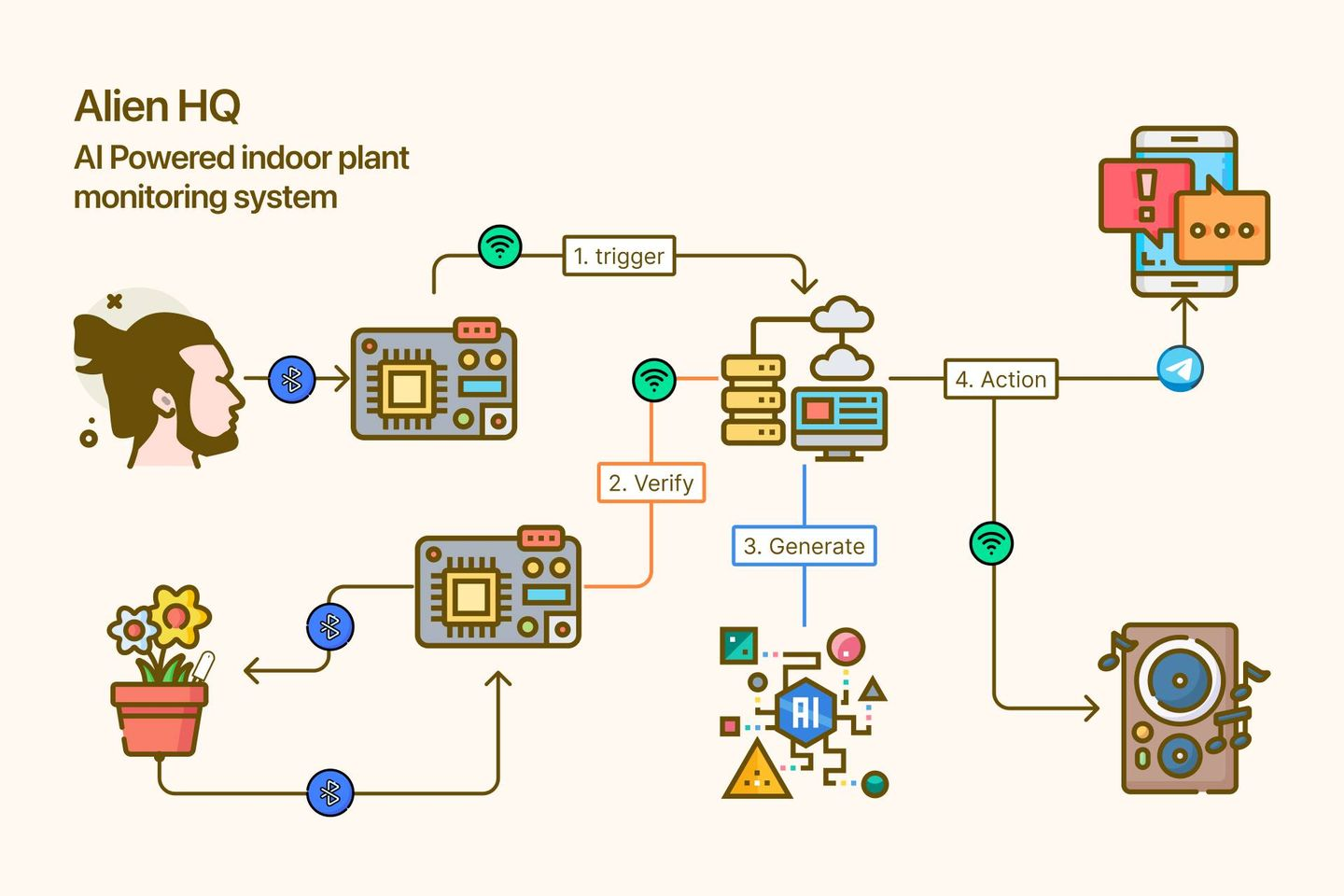

Let’s see how it works

I have a number of indoor plants in different rooms of my house. To monitor them, I bought some HHCC Flower Care soil sensors about a year ago. The mobile app allows me to see the soil moisture, fertilizer level, and other information.

Since the sensors send data to the mobile phone via Bluetooth, the range is naturally not very wide.

I had some spare ESP32 development boards in my drawer, so the first thing I did was flash a few of them and turn them into Bluetooth proxies.

These essentially take the data from the Bluetooth beacon and send it to my Home Assistant server via WiFi. As a result, I don’t have to go near the sensor to see the data.
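For reference, here is a minimal sketch of what such a proxy could look like, assuming the boards run ESPHome firmware (one common way to build a Bluetooth proxy for Home Assistant). The device name, board type, and secret names are placeholders, not values from my setup.

```yaml
# Hypothetical ESPHome config for an ESP32 Bluetooth proxy.
# Device name, board, and secrets are placeholders.
esphome:
  name: office-bt-proxy

esp32:
  board: esp32dev
  framework:
    type: esp-idf

wifi:
  ssid: !secret wifi_ssid
  password: !secret wifi_password

# Native API connection to Home Assistant
api:

logger:

# Scan for BLE advertisements (such as the soil sensor broadcasts)
esp32_ble_tracker:

# Forward what the tracker hears to Home Assistant over WiFi
bluetooth_proxy:
  active: true
```

Once a board like this joins the network, Home Assistant can pick up the sensor broadcasts from whichever proxy is closest to the plant.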

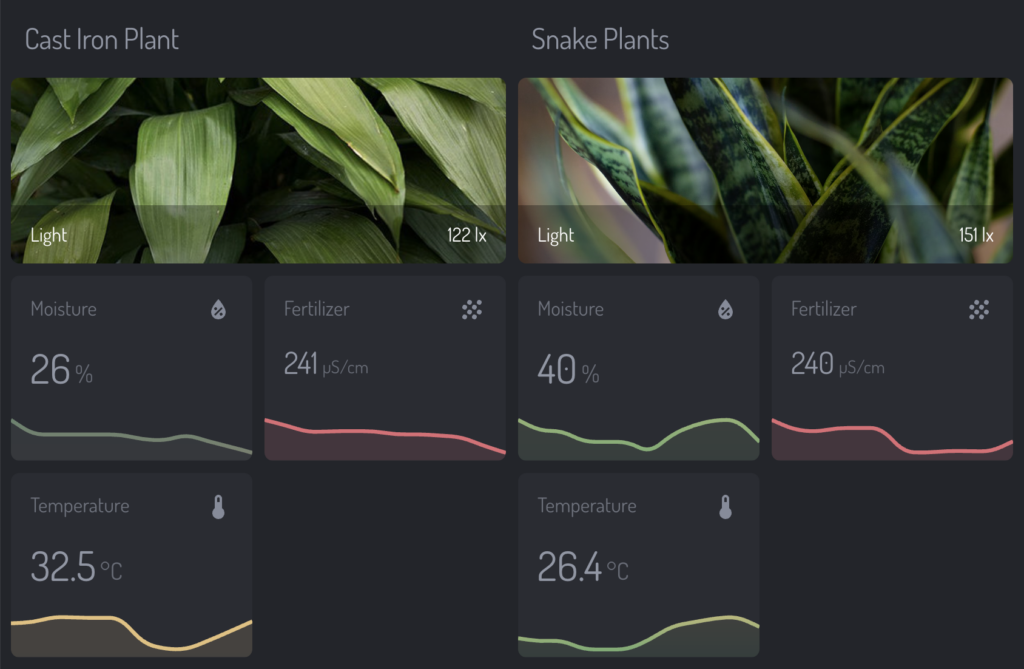

Now the data can be viewed in my home dashboard app like this.

To make the data visualization more useful, I added some color coding. Green indicates that the level is just right, red means it is below the required level, and yellow means it is above the required level. This part has nothing to do with the automation, but hey, why not?

```yaml
type: custom:mini-graph-card
entities:
  - sensor.snake_plants_moisture
name: Moisture
icon: mdi:water-percent
line_width: 8
animate: true
line_color: var(--accent-color)
color_thresholds:
  - value: 10
    color: '#e06c75'
  - value: 30
    color: '#069869'
  - value: 50
    color: '#e5c07b'
```

I’ve also flashed a couple of ESP32 boards with ESPresense to use as room presence sensors. They track where we (actually, our phones) are, and I use this data for a lot of other automations as well. Perhaps that is a topic for another day.
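As a rough idea of how a phone ends up as a room sensor in Home Assistant, here is a minimal sketch, assuming ESPresense publishes over MQTT and the phone is tracked through the `mqtt_room` sensor platform. The device ID and topic are placeholders; the real values depend on how the phone shows up in ESPresense.

```yaml
# Hypothetical room-presence sensor fed by ESPresense over MQTT.
# device_id and state_topic are placeholders.
sensor:
  - platform: mqtt_room
    name: "Mamun Phone"
    device_id: "my-phone-id"
    state_topic: "espresense/devices/my-phone-id"
    timeout: 10        # seconds before a stale reading is ignored
    away_timeout: 120  # seconds without readings before "not_home"
```

With a setup along these lines, the sensor state is the name of the room whose ESPresense node currently sees the phone best, for example `office`.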

The hardest part is done

What we need to do now is use AI to convert this data into dynamic sentences.

To do this, I created a very basic automation in Home Assistant. Each automation typically has three parts:

1. Trigger: When and how the automation will start. In this case, I used my phone’s Bluetooth beacon as the trigger. For example, when I enter the office room with my phone, the automation will start.

```yaml
trigger:
  - platform: state
    entity_id:
      - sensor.mamun_phone
    to: office
```

2. Condition: In this step, the automation checks whether the conditions required for it to run are met. In this case, if the soil moisture of the snake plant in the office room is below 25%, the automation proceeds to the next step. Otherwise, it stops.

```yaml
- conditions:
    - condition: numeric_state
      entity_id: sensor.snake_plants_moisture
      below: 25
```

3. Action: This part defines what happens once the automation is triggered and the condition is met.

Here, I want a message to be generated that makes it seem like the plant itself is asking for water if the soil moisture is less than 25%. Instead of using the same boring message every time, I am now using Google Gemini to write the messages, although I initially started with ChatGPT. Both work great in their own way.

```yaml
service: conversation.process
metadata: {}
data:
  agent_id: [YOUR_AI_AGENT]
  # states('...') is safer than states.sensor.x.state if the entity is not ready yet
  text: "write a humorous notification message based on this prompt: Imagine you are my favorite Snake Plant and you are very thirsty. The soil is very dry, current moisture level is {{ states('sensor.snake_plants_moisture') }} only. It's difficult to survive in this condition. Request for water in a sentence or two like a child."
response_variable: gptplant
```

As a result, the AI writes a response like this, and it is a little different each time. Isn’t that interesting?

```yaml
response:
  speech:
    plain:
      speech: "I'm so thirsty! Can you please give me some water? I'm so dry, I can barely hold on!"
      extra_data: null
  card: {}
  language: en
  response_type: action_done
  data:
    targets: []
    success: []
    failed: []
```

Now, the final piece of the automation is converting this text to speech for broadcast.

There are many text-to-speech engines and voice models available these days. I primarily use Piper and Nabu Casa Cloud to cast messages through my Google Nest.

```yaml
service: [your_tts_service]
metadata: {}
data:
  cache: false
  entity_id: media_player.office_speaker
  message: "{{ gptplant.response.speech.plain.speech }}"
  language: en-US
  options:
    voice: [your_preferred_voice_model]
```

Yep, that’s exactly it! I’ve also added a few other plants, with their fertilizer and light level thresholds, to the automation.

Now, whenever I’m near my plants, I can hear what they need.
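To show how the pieces fit together, here is a sketch of one way the whole automation could be assembled. It assumes the condition sits inside a `choose` block and that speech goes through the `tts.speak` action. The alias, `conversation.my_ai_agent`, and `tts.piper` are placeholders, so swap in your own agent and TTS entity.

```yaml
# Sketch only: the alias, agent ID, and TTS entity are placeholders.
alias: Snake plant asks for water
mode: single
trigger:
  # Fires when the phone's room sensor changes to "office"
  - platform: state
    entity_id:
      - sensor.mamun_phone
    to: office
action:
  - choose:
      # Only speak when the soil is drier than 25%
      - conditions:
          - condition: numeric_state
            entity_id: sensor.snake_plants_moisture
            below: 25
        sequence:
          # Ask the conversation agent for a fresh message
          - service: conversation.process
            data:
              agent_id: conversation.my_ai_agent  # placeholder
              text: >-
                Write a humorous notification message. Imagine you are my
                favorite Snake Plant and you are very thirsty. The current
                moisture level is only {{ states('sensor.snake_plants_moisture') }}.
                Ask for water in a sentence or two, like a child.
            response_variable: gptplant
          # Speak the generated text on the office speaker
          - service: tts.speak
            target:
              entity_id: tts.piper  # placeholder TTS entity
            data:
              media_player_entity_id: media_player.office_speaker
              message: "{{ gptplant.response.speech.plain.speech }}"
              cache: false
```

Adding another plant, or a fertilizer or light check, would then mean one more entry under `choose`, each with its own condition and prompt.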